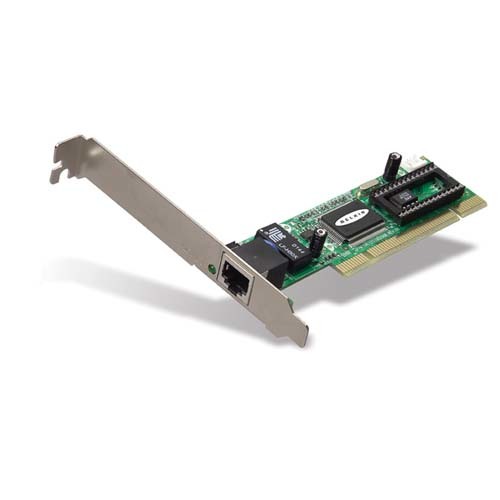

NVIDIA ConnectX-5 – Network adapter – PCIe 3.0 x16 – 100Gb Ethernet / 100Gb InfiniBand QSFP28 x 1

AED4,799.00

NVIDIA Mellanox ConnectX-5 adapters offer advanced hardware offloads to reduce CPU resource consumption and drive extremely high packet rates and throughput. This boosts data center infrastructure efficiency and provides the highest performance and most flexible solution for Web 2.0, Cloud, Data Analytics and Storage platforms.

| Specification | Details |
| --- | --- |
| Device Type | Network adapter |
| Form Factor | Plug-in card |
| Interface (Bus) Type | PCI Express 3.0 x16 |
| PCI Specification Revision | PCIe 2.0, PCIe 3.0 |
| Networking: Ports | 100Gb Ethernet / 100Gb InfiniBand QSFP28 x 1 |
| Connectivity Technology | Wired |
| Data Link Protocol | 100 Gigabit Ethernet, 100 Gigabit InfiniBand |
| Data Transfer Rate | 100 Gbps |
| Network / Transport Protocol | TCP/IP, UDP/IP, SMB, NFS |
| Features | QoS, SR-IOV, RoCE, InfiniBand EDR Link support, VXLAN, NVGRE, ASAP, NVMf Offloads, vSwitch Acceleration |
| Compliant Standards | IEEE 802.1Q, IEEE 802.1p, IEEE 802.3ad (LACP), IEEE 802.3ae, IEEE 802.3ap, IEEE 802.3az, IEEE 802.3ba, IEEE 802.1AX, IEEE 802.1Qbb, IEEE 802.1Qaz, IEEE 802.1Qau, IEEE 802.1Qbg, IEEE 1588v2, IEEE 802.3bj, IEEE 802.3bm, IEEE 802.3by, OCP 3.0, RoHS |
| Expansion / Connectivity: Interfaces | 1 x 100Gb Ethernet / 100Gb InfiniBand – QSFP28 |
| Miscellaneous: Compliant Standards | RoHS |
| Software / System Requirements: OS Required | FreeBSD, Microsoft Windows, Red Hat Enterprise Linux, CentOS, VMware ESX |

04 3550600

052 7036860

info@techsouq.com